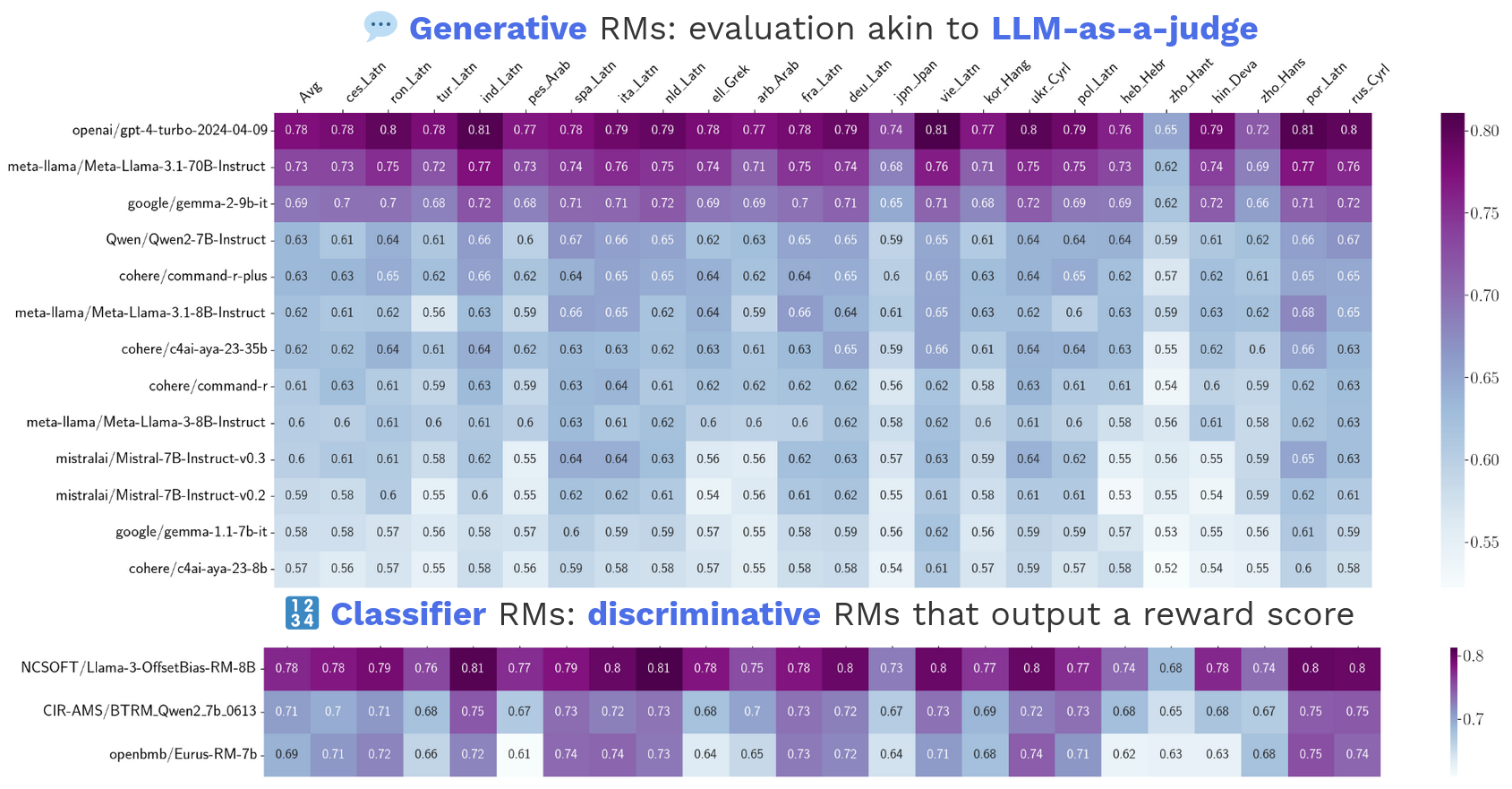

Evaluating Multilingual Reward Models

Developing a multilingual evaluation framework for diverse reward models with appropriate metrics, datasets and baselines.

RewardBench is the only established benchmark that directly evaluates reward model (RM) capabilities. However, it focuses primarily on English and does not capture multilingual capabilities.

To address this gap, we translated RewardBench into 23 languages (the same set covered by Aya-23). Our evaluation covers diverse model families, sizes, and versions.
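
As a rough illustration of what a per-language evaluation over translated preference pairs could look like, the sketch below computes, for each language, the fraction of pairs where a reward model scores the chosen response above the rejected one. This is a minimal sketch under assumptions, not the project's actual pipeline: the field names (`language`, `prompt`, `chosen`, `rejected`), the `per_language_accuracy` helper, and the `toy_score` stand-in for a real reward model are all hypothetical, and the example data is made up purely to exercise the code.

```python
from collections import defaultdict


def per_language_accuracy(examples, score_fn):
    """Return {language: accuracy}, where a pair counts as correct when
    score_fn rates the chosen response strictly above the rejected one."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for ex in examples:
        lang = ex["language"]
        chosen_score = score_fn(ex["prompt"], ex["chosen"])
        rejected_score = score_fn(ex["prompt"], ex["rejected"])
        correct[lang] += int(chosen_score > rejected_score)
        total[lang] += 1
    return {lang: correct[lang] / total[lang] for lang in total}


def toy_score(prompt, response):
    # Hypothetical placeholder for a reward model: longer responses score higher.
    return len(response)


# Made-up preference pairs; field names are assumptions for this sketch.
examples = [
    {
        "language": "en",
        "prompt": "What is the capital of France?",
        "chosen": "Paris is the capital of France.",
        "rejected": "Lyon.",
    },
    {
        "language": "fr",
        "prompt": "Quelle est la capitale de la France ?",
        "chosen": "Paris.",
        "rejected": "La capitale de la France est Lyon, je crois.",
    },
]

accuracies = per_language_accuracy(examples, toy_score)
for lang, acc in sorted(accuracies.items()):
    print(f"{lang}: {acc:.2f}")
```

Reporting accuracy per language rather than a single pooled number makes it easier to see where a reward model that performs well in English degrades on other languages; here the length-based placeholder gets the English pair right and the French pair wrong.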